Do GPUs Alone Enable Superior Generative AI? Exploring the Secrets Behind the ‘Memory’ that Elevates AI Thinking

November 20, 2025

When you ask generative AI a question, it answers quickly and clearly. It is often explained that generative AI is extremely knowledgeable because it is trained on a massive amount of data. While it is possible to increase performance by continuing to train AI, it is not simple to achieve on a practical level.

Although some may think that acquiring more advanced GPUs is the sole solution, it is not the only factor impacting AI performance. Additional variables must be considered in AI development and usage, including the hardware processing power, the quantity and quality of the data AI is trained on, and the quality and verifiability of the AI output.

With more companies expanding beyond cloud-based AI solutions, like ChatGPT, and introducing in-house generative AI capabilities, it’s helpful to revisit how generative AI works. To explore this topic further, we spoke with Koichi Fukuda of Kioxia Corporation (Kioxia), a major Japanese semiconductor manufacturer and leading producer of NAND flash memory. We asked him to explain the basics of generative AI from the perspective of a flash memory technology professional who has worked on storage products for over 30 years.

“Generative AI is a collection of data,” and once you understand the basics, you will see how to use it

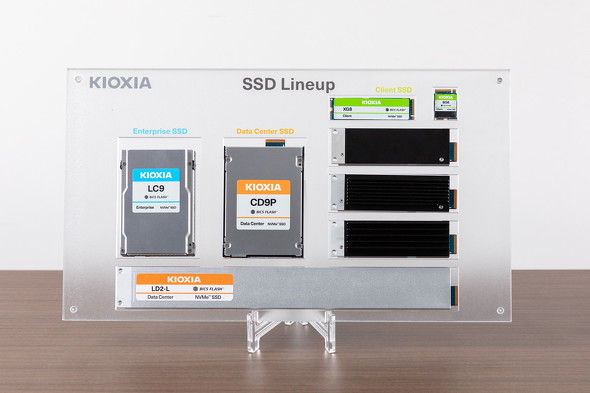

Kioxia originated from Toshiba's semiconductor memory business, and currently designs and manufactures products such as flash memory and SSDs. The NAND flash memory invented in 1987 by Kioxia is highly regarded for its data storage capacity, write speed, power-saving performance, and more, and is widely used in electronic devices such as digital cameras and smartphones.

Kioxia's Koichi Fukuda explains, “Kioxia regards the emergence of generative AI as a golden opportunity. AI is ‘a collection of data’. While GPUs are attracting most of the attention in the development of AI technology, the storage that is responsible for enabling AI’s ability to ‘remember’ is an element that cannot be ignored.”

It is easy to forget when interacting with AI tools via chat that AI is made up of data. The large language models (LLMs) that underpin generative AI are created using massive amounts of information from the Internet, as well as from individual organizations. They read a diverse set of data including websites, books, papers, videos, images and more to accumulate knowledge, statistically learn how words are connected, and analyze contextual patterns to produce human-like intelligent behavior.

The Llama 3.1 open-source LLM released by Meta in 2024 boasts an impressive 405 billion parameters. It is estimated that the number of parameters in the GPT-4 model that powers ChatGPT exceeds an astounding one trillion. In general, larger numbers of parameters lead to more powerful LLMs. The volume of data used to train Llama 3.1 was over 15 trillion tokens. Since 100,000 tokens is roughly equivalent to one book, the magnitude of data used in AI training is surprising to most people.
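To put the scale in perspective, the two figures above can be combined into a rough book count. This is only a back-of-the-envelope sketch: the token count and the 100,000-tokens-per-book rule of thumb come from the article, while the function name is made up for illustration.

```python
# Figures quoted in the article.
TRAINING_TOKENS = 15_000_000_000_000  # "over 15 trillion tokens" (Llama 3.1)
TOKENS_PER_BOOK = 100_000             # the article's rough rule of thumb


def books_equivalent(tokens: int, tokens_per_book: int = TOKENS_PER_BOOK) -> int:
    """Return how many 'books' a token count corresponds to (integer division)."""
    return tokens // tokens_per_book


print(f"{books_equivalent(TRAINING_TOKENS):,} books")  # 150,000,000 books
```

In other words, the training data is on the order of 150 million books.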

LLMs are built by ‘training’ on data. When we interact with an AI, the LLM is performing a type of background processing called ‘inference’. When producing output in response to a user's instructions, it derives an answer based on learned knowledge and patterns.

“The GPUs handle the calculations necessary for the training and inference. High-performance GPUs are critical for shortening the time spent on training and inference, as well as building larger models. It is against this backdrop that the demand for GPUs is increasing,” said Fukuda.

Even if one were to continue to increase GPU performance, there is no guarantee that it would create generative AI that is useful for business. AI is knowledgeable because it derives the appropriate answers from a massive amount of training data. To look at it another way, it cannot answer questions about material that it has not trained on. Even if you ask a general-purpose AI, “What are the work rules for this company?” it will not produce the correct answer. Additionally, if you ask an LLM created in 2024 about the international situation or economic trends in 2025, it cannot respond with the latest information.

A technology called RAG (Retrieval-Augmented Generation) is starting to take root as a way to solve this issue. By referencing external sources during inference, the LLM supplements its knowledge with company-specific data and the latest information that it has not trained on. For example, an organization could create a custom AI chatbot using internal company rules or build a legal affairs AI tool by preparing data sources that combine patent information and laws.
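The flow described above can be sketched in a few lines. This is a deliberately simplified illustration, not how any particular product works: the documents are invented, retrieval is plain word overlap rather than a vector search, and the resulting prompt would still need to be sent to an LLM of your choice.

```python
import re

# Hypothetical in-house documents the base model was never trained on.
DOCUMENTS = [
    "Work rules: standard working hours are 9:00 to 17:30 with a one-hour break.",
    "Expense policy: submit receipts within 30 days of purchase.",
    "Security policy: lock your screen whenever you leave your desk.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Prepend the retrieved text so the model can answer from it."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("What are the working hours in the work rules?", DOCUMENTS)
print(prompt)
```

The key point is that the model's weights never change; the company-specific knowledge arrives through the prompt at inference time, which is why the speed of looking up that data matters.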

In addition, a prompting technique called Chain-of-Thought, which increases response accuracy, has recently emerged. Since generative AI tends to struggle with complex tasks, this technique breaks a single task into several steps to obtain the appropriate output result through step-by-step reasoning.
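As a rough illustration of the idea, the sketch below wraps a task in explicit numbered steps before it is handed to a model. The helper name, the wording of the instruction, and the example task are all assumptions made for this example; real Chain-of-Thought prompts vary widely.

```python
def chain_of_thought_prompt(task: str, steps: list[str]) -> str:
    """Wrap a task in explicit, numbered reasoning steps."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Task: {task}\n"
        "Work through the following steps in order, showing your reasoning "
        "for each, then state the final answer.\n"
        f"{numbered}"
    )


prompt = chain_of_thought_prompt(
    "Decide whether this expense claim complies with company policy.",
    [
        "List the policy rules that apply.",
        "Check the claim against each rule.",
        "Summarize the outcome.",
    ],
)
print(prompt)
```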

AI is changing the power structure in the storage market

“Whether you are conducting AI training, inference, or RAG, the key aspect is the data,” according to Fukuda. “If we regard the GPU as the ‘brain’ of AI, then no matter how fast that brain operates, it will not produce superior thinking without knowledge. The ‘memory’ that stores the data forming the basis of thought and rapidly retrieves it when needed impacts AI's response accuracy and answer quality. The element that is responsible for this ‘memory’ is storage.”

AI performance greatly depends on the quantity and quality of the training data. Even if you collect 15 trillion tokens of data, as in the case of Llama 3.1, the question of how to store and use that data is a challenge. To date, data centers often used HDDs for storage. Because HDDs have been perceived to offer reasonable cost performance due to their high capacity and low cost, HDDs have frequently been the default choice for many infrastructure managers. However, Fukuda explains that they are not quite meeting the needs of the AI era.

“HDDs physically rotate a magnetic disk to read and write data, so there are limits to the data access speed. They cannot keep up with the data supply speed required by GPUs. Therefore, an increasing number of companies are adopting SSDs for their AI infrastructure storage.”

HDDs read data in the order that it was recorded, like a vinyl record. Because SSDs record data electronically, they have high ‘random read’ performance that directly accesses the location where the data is stored. Fukuda explains, “While SSDs are generally more expensive, the data read performance of SSDs can be orders of magnitude higher, up to 10,000 times faster or more.”

“The price of a single high-performance GPU server can run from several million to tens of millions of yen. When the data supply speed is slow, you will encounter a situation where you cannot perform the calculations because the data has not been sent, and you will be unable to utilize the full capabilities of an expensive GPU. Choosing SSDs to resolve data-related bottlenecks and maximizing performance without leaving the GPU idle is a wise decision.”
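The bottleneck Fukuda describes can be expressed as a simple ratio. The sketch below assumes a very crude model in which the GPU stalls whenever data arrives more slowly than it can be consumed; the throughput figures are hypothetical and chosen only to show the shape of the problem.

```python
def gpu_utilization(supply_gb_per_s: float, demand_gb_per_s: float) -> float:
    """Fraction of time the GPU can compute if it stalls whenever data is late."""
    if demand_gb_per_s <= 0:
        raise ValueError("demand must be positive")
    return min(1.0, supply_gb_per_s / demand_gb_per_s)


# Hypothetical figures, not measurements of any real system.
DEMAND = 10.0  # GB/s the GPU could consume if data were always ready
for label, supply in [("slow storage", 2.0), ("fast storage", 12.0)]:
    print(f"{label}: GPU busy {gpu_utilization(supply, DEMAND):.0%} of the time")
```

Under these assumed numbers, the slow storage leaves the GPU busy only 20% of the time, while the fast storage keeps it fully occupied.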

In addition, the gap between SSDs and HDDs is narrowing when viewed in terms of TCO (total cost of ownership), which includes electricity and maintenance costs. SSDs consume less power and have lower failure rates compared to HDDs. When comparing devices with the same capacity, SSDs can be miniaturized to a greater degree, which saves space. This benefit also makes SSDs a viable option.

Answering NVIDIA's challenge with the “100 Million IOPS SSD”

NVIDIA, a leading AI semiconductor company, is also keenly aware of the importance of data storage. In late 2024, the company presented its vision for SSDs that would support next-generation AI systems. Fukuda recalls that the requirements were shocking to the storage industry.

“The requirements presented by NVIDIA would increase the current random read performance of SSDs by more than an order of magnitude. This means that IOPS (input/output operations per second), which indicates the number of read and write operations per second, would increase from around 10 million to 100 million. This is an extremely ambitious goal for storage developers, including Kioxia.”
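IOPS counts operations, not bytes, so the data rate it implies depends on how much each operation transfers. The sketch below converts the two IOPS figures from the quote into throughput; the 512-byte and 4,096-byte read sizes are assumptions for illustration, since the article does not state one.

```python
def throughput_gb_per_s(iops: int, block_bytes: int) -> float:
    """Data rate implied by an IOPS figure at a given transfer size."""
    return iops * block_bytes / 1e9


# IOPS values from the article; block sizes are assumed, not from the source.
for iops in (10_000_000, 100_000_000):
    for block_bytes in (512, 4096):
        rate = throughput_gb_per_s(iops, block_bytes)
        print(f"{iops:>11,} IOPS x {block_bytes:>4} B = {rate:7.2f} GB/s")
```

With small reads, even 100 million IOPS corresponds to a moderate byte rate (51.2 GB/s at 512 bytes), which suggests the difficulty lies in the sheer number of independent lookups rather than raw bandwidth.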

In response, Kioxia announced its ‘Super High IOPS SSD’ concept, which exceeds 10 million IOPS, at its management policy briefing in June 2025. Masashi Yokotsuka, Managing Executive Officer, Vice President, SSD Division, at Kioxia stated, “We will begin shipping samples of an unprecedented SSD to support AI in the latter half of 2026.”

Kioxia is aiming to achieve early implementation of the Super High IOPS SSD by applying its ‘XL-FLASH’ flash memory technology which it has been developing for many years. XL-FLASH is a type of flash memory that substantially reduces delays in data read and write operations.

“With AI utilization gaining momentum, random read performance is attracting attention, and we have reached the phase where we can apply a technology that previously could not demonstrate its true value. We are confident about our ability to develop 100 million IOPS SSDs because we have the XL-FLASH technology,” said Fukuda.

Solving AI challenges with storage

A hardware-agnostic approach

Kioxia's efforts are not limited to hardware. The company has released the data search software ‘KIOXIA AiSAQ™’ (AiSAQ) as open source and is supporting the advanced utilization of vector databases used in RAG.

Vector databases convert the meaning and context of data such as words and sentences into sets of numerical values called ‘vectors’ and store them. Preparing a vector database enables you to search for ‘data with a high degree of relevance and similarity’ that a keyword search alone cannot reveal. For example, searching for the word ‘read’ would return results such as ‘books, newspapers, and letters’ and would be less likely to return low-relevance sentences such as ‘reading wine.’ Vector databases are increasingly used as the mechanism for referencing external information with RAG.
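A toy version of this kind of similarity search is sketched below using cosine similarity, one common way to compare vectors. The three-dimensional vectors are hand-made for illustration; in practice they would come from an embedding model and have hundreds or thousands of dimensions, and a real vector database would use an approximate index rather than scanning every entry.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


# Hypothetical hand-made vectors, not output from any real embedding model.
INDEX = {
    "book": [0.9, 0.1, 0.0],
    "newspaper": [0.8, 0.2, 0.1],
    "letter": [0.7, 0.3, 0.0],
    "wine": [0.0, 0.1, 0.9],
}


def search(query: list[float], index: dict[str, list[float]], top_k: int = 3) -> list[str]:
    """Return the top_k keys whose vectors are most similar to the query."""
    ranked = sorted(index, key=lambda k: cosine_similarity(query, index[k]), reverse=True)
    return ranked[:top_k]


READ = [1.0, 0.2, 0.0]  # assumed vector for the query word "read"
print(search(READ, INDEX))  # ['book', 'newspaper', 'letter']
```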

Vector databases are often handled using a type of memory called DRAM (Dynamic Random Access Memory). DRAM is able to read and write data at extremely high speeds, which makes it well suited for real-time processing scenarios like AI response generation. However, DRAM is expensive and has low capacity, which could hinder the expansion of the RAG market.
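To see why capacity becomes the constraint, it helps to estimate how much raw space the vectors alone occupy. The sketch below assumes 768-dimensional vectors stored as 4-byte floats and ignores index overhead; the vector counts and dimensions are hypothetical, not figures from the article.

```python
def index_size_gb(num_vectors: int, dimensions: int, bytes_per_value: int = 4) -> float:
    """Raw size of the stored vectors in GB, ignoring any index overhead."""
    return num_vectors * dimensions * bytes_per_value / 1e9


# Hypothetical collection sizes with assumed 768-dimensional float32 vectors.
for count in (1_000_000, 1_000_000_000):
    print(f"{count:>13,} vectors -> {index_size_gb(count, 768):,.1f} GB")
```

Under these assumptions, a million vectors need about 3 GB, which fits comfortably in DRAM, while a billion need about 3,072 GB, which is where SSD-resident storage starts to look attractive.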

“AiSAQ was developed to handle larger sets of data than DRAM by migrating vector databases to SSDs. Through the development of AiSAQ, we hope to not only contribute to the development of AI, but also understand the challenges at the front lines of AI research,” said Fukuda.

The development of AiSAQ has led to conversations with partner companies and revealed the real concerns of the business world. Challenging issues include how to efficiently update vector databases with data that is updated daily and how to handle simultaneous access by many users.

Fukuda added, “Deeply understanding the issues in the field reveals the level of performance that SSDs need to have. We then feed that knowledge back into the hardware development. This cycle makes us stronger.”

As AI development searches for the solution, “We want to lead the way”

On one occasion, Fukuda asked the hyperscalers, “What will happen to SSDs as AI advances?” The answer he received from the hyperscalers, who are at the forefront of AI development, was that they don't know and would like SSD developers, such as Kioxia, to tell them.

“The hyperscalers are also searching for the solution. We want to personally participate in discussions to solve problems and lead the way in finding a path forward. NVIDIA's challenging requirements are a golden opportunity for us.”

GPUs are the star attraction of the generative AI boom. However, as flash memory, which supports AI performance from behind the scenes, increases in importance, it won't be long before storage takes center stage. When that happens, Kioxia's efforts will surely come to fruition.

Company names, product names, and service names may be trademarks of third-party companies.

Reprinted from: ITmedia NEWS

Reprinted from the August 8, 2025 edition of ITmedia NEWS

This article was reprinted with permission from ITmedia NEWS.