
Co-creating with AI: The Future of AI in the Field of Creativity

Part 1: How NVIDIA and Kioxia Used AI to Create a New Manga

April 25, 2022

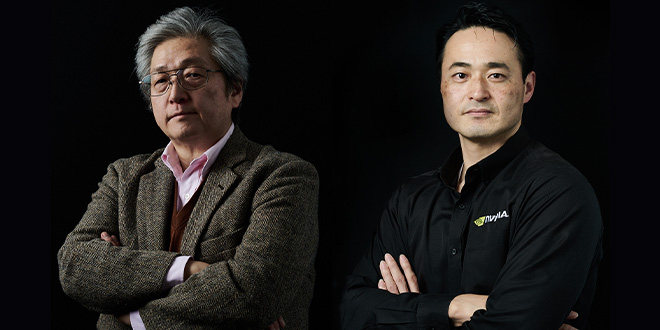

The Tezuka 2020 project brought together AI technology and manga artists with the goal of creating a brand new manga in the style of Osamu Tezuka, one of Japan’s most beloved manga artists. By training a machine using data from Tezuka’s manga, a team of Kioxia engineers and other members created an AI that is able to automatically generate character designs and plotlines. These AI-generated designs were then polished by manga artists to bring the story and art to completion. Kioxia’s Ryohei Orihara and NVIDIA’s Takeshi Izaki discuss their roles on the technical side of this exciting project, part of the first phase of Kioxia’s Future Memories campaign.

Tezuka 2020: An AI-Generated Manga

Osamu Tezuka is considered one of the greatest manga artists of all time. If he were alive today, what stories would he tell? This is the question that inspired the groundbreaking Tezuka 2020 project, which successfully provided one answer through a collaboration between AI and humans. Kioxia’s Ryohei Orihara, who spearheaded the project, looks back on how it all began.

Orihara: Tezuka 2020 began as a way to promote the AI technologies that we at Kioxia use in our work. As we were considering what to do in the first six months of Kioxia’s Future Memories campaign, we learned that February 9, the anniversary of Osamu Tezuka’s death, is observed as Manga Day. So we thought that announcing a manga-related project would be a poignant way to mark the occasion.

We consulted with many people along the way, including Dr. Hitoshi Matsubara of the University of Tokyo’s Next Generation Artificial Intelligence Research Center, Dr. Satoshi Kurihara of the Faculty of Science and Technology in Keio University’s Department of Industrial and Systems Engineering, and Dr. Kazushi Mukaiyama of Future University Hakodate, as well as Macoto Tezka [the stylized spelling adopted by Osamu Tezuka’s son, Makoto Tezuka] and others from Tezuka Productions. Finally, I served as the project leader, with many engineers from Kioxia also participating.

──How did the project end up becoming an AI-human collaboration?

Orihara: As we were discussing all of the things that AIs are capable of doing nowadays, we realized that it would still be difficult for an AI to create a manga that is fit to sell. That’s why we decided to bring in human artists to collaborate with the AI. We could have gone about this collaboration in many different ways but decided on a format in which the AI would provide the plotlines and basic character designs, which would then be incorporated into a manga by human artists. The plot creation went relatively smoothly, but we ran into some stumbling blocks in terms of character design.

──How did you train the AI to reproduce Tezuka’s style?

Orihara: First, we extracted a large number of facial images from Tezuka’s manga and imported them as training data, then used a generative adversarial network (GAN) to create new character designs. Unfortunately, this did not go as planned. An engineer at Kioxia came up with the idea of using a model that was already capable of recognizing and generating faces. Rather than building a character-generation model from scratch, the engineer proposed repurposing the existing model through what is called transfer learning, that is, fine-tuning a trained model to solve a related problem. Interestingly, this engineer is not an expert in AI, but that approach was what worked in the end.

After that, we tried to train the model by using a lot of faces of anime characters, but one of the manga artists very astutely pointed out that this would train the model to reproduce the style of the animator, not that of Osamu Tezuka. And so we used a model trained on human faces as the basis of our engine, using transfer learning to apply its knowledge to Tezuka’s drawings. Since Tezuka himself would have drawn inspiration from real human faces when creating his characters, the idea seemed philosophically sound. We decided to use an NVIDIA model that had been trained on human faces, which is how NVIDIA became involved in the project.
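The transfer-learning idea described above can be sketched with a deliberately tiny toy problem. This is not the project's code and does not use StyleGAN: it fits a straight line instead of generating faces, and every name, dataset, and hyperparameter in it is a hypothetical stand-in. It only illustrates the general pattern of pretraining on a large, related dataset and then fine-tuning briefly on a small one.

```python
# Toy illustration of transfer learning (fine-tuning); NOT the project's StyleGAN code.
# All names, data, and hyperparameters here are hypothetical.

def mse(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(w, b, xs, ys, steps, lr=0.1):
    """Run plain gradient descent on MSE, starting from the given (w, b)."""
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Source" task with plenty of data (stands in for a large human-face dataset).
source_xs = [i / 50 for i in range(-50, 51)]
source_ys = [2.0 * x + 1.0 for x in source_xs]

# Related "target" task with very little data (stands in for the small Tezuka set).
target_xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
target_ys = [2.2 * x + 0.8 for x in target_xs]

# 1) Pretrain on the source task.
pre_w, pre_b = train(0.0, 0.0, source_xs, source_ys, steps=500)

# 2) Fine-tune: start from the pretrained weights, train briefly on the target.
ft_w, ft_b = train(pre_w, pre_b, target_xs, target_ys, steps=20)

# Baseline: same short training budget, but starting from scratch.
sc_w, sc_b = train(0.0, 0.0, target_xs, target_ys, steps=20)

finetuned_loss = mse(ft_w, ft_b, target_xs, target_ys)
scratch_loss = mse(sc_w, sc_b, target_xs, target_ys)
print(f"fine-tuned loss: {finetuned_loss:.5f}")
print(f"from-scratch loss: {scratch_loss:.5f}")
```

With the same short training budget, the run that starts from pretrained weights ends closer to the target than the run that starts from zero, because the source and target tasks are similar. That is the intuition behind starting from a model trained on human faces rather than from nothing.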

Izaki: Yes. Dr. Kurihara was the first to contact me about using our StyleGAN model. I had been reading Tezuka since I was a boy, everything from Astro Boy to Black Jack, so I was honored to be asked. It was exciting to think that NVIDIA’s technology could be used for this project. At the time, GANs were all the rage in the world of AI for their ability to handle creative work. I was also intrigued that Kioxia wanted to use our model for a project related to manga, which I consider a unique part of Japanese culture. So I approached the NVIDIA head office to ask if we could get involved.

──What exactly was NVIDIA’s involvement?

Izaki: The source code for NVIDIA’s StyleGAN is publicly available on GitHub for anyone to use. Dr. Kurihara took that open-source algorithm and adapted it for Tezuka 2020. Because GitHub is an open source community, we could discuss aspects of the project with anyone in the community, whether they were engineers with NVIDIA or Kioxia or not.

Orihara: GitHub also allows you to report bugs and view other people’s discussions.

Izaki: That is the great thing about an open source community.

──What did you learn as a result of the project?

Orihara: Personally, I’m just glad it didn’t go up in flames! I was surprised that we got so little negative feedback. A large part of that is thanks to the talented manga artists who brought the project to completion. I think it was good that Tezuka 2020 focused on creating manga similar to what Osamu Tezuka might have made, rather than creating an AI Osamu Tezuka.

Izaki: On the technical side, by introducing cutting-edge technology into manga culture, I think we were able to propose new creative possibilities in the genre, which I consider a major achievement. Also, as you pointed out, the resulting manga was embraced by the public just like any other manga, so I get the impression that it opened people’s eyes to a new way of thinking about AI. It helped AI become more democratized, more of an everyday thing. The manga is a collaboration between humans and AI. Since AI is just another tool for us humans to use, it is a good example of how we can use AI.

──Did any difficulties arise in terms of the AI-human collaboration?

Izaki: The characters created by the AI could not be used as is; they still needed to be tweaked by a human. From among a few different candidates, we selected a main character who has a bit of darkness in him. I think this is an interesting example of collaborative work—bringing in a human being to make the final choice and finalize the character.

Orihara: As for the plot, there were in fact many plot points that didn’t make any sense, but Macoto Tezka was very good at deciphering them. He created a story from things that seemed contradictory at first glance but were compelling when put together in the right way. Basically, the AI produced a random series of dots, which Tezka skillfully connected into an engaging story. I think the final result is actually more interesting because he connected dots that at first glance seemed too far apart to have any connection at all, rather than dots that were clustered together.

Nobody ever said it out loud, but when we started the project, everyone was wondering the same thing: If this project is successful, and people ask us about what exactly the AI had learned of Tezuka’s style, what are we going to answer? Maybe I was the only one, but I had no idea at first. I was hoping that if Tezuka Productions looked at the work and gave it their endorsement, nobody would bother to ask.

──So you found “Tezuka-ness” difficult to define.

Orihara: We actually used only some of Tezuka’s characters in the training data, not all of them. This was partly because of the technical difficulty of cropping out so many different faces, but more importantly, we thought that including characters with a truly unique look, such as Astro Boy or Black Jack, would exert too much influence on the model and make learning difficult.

As a result, the AI began to produce characters that were not included in the training data, some of which the manga artists found interesting. That was when I finally thought we could say that the AI had learned Tezuka’s style.

Izaki: For our StyleGAN demo, we had the model learn a lot of faces of celebrities, so the faces it created looked like they could belong to a celebrity. Similarly, the AI learned the faces of Osamu Tezuka’s characters via transfer learning and then produced faces that, even to the untrained eye, look like the kind of thing Tezuka might have drawn. The sharp glint in the characters’ eyes and their strength of will really come through. Even though GAN models are known for generating images that are similar but not identical to the training data, it was amazing to see something so “Osamu Tezuka-like.”

──There have been times when an artist is unable to continue their work for one reason or another. Even when that happens, fans are hungry for the next part of the story. What do you think will be necessary for AIs to become involved in the creative aspects of storytelling?

Izaki: That’s quite a difficult question. Fans of an artist love their work because it was the artist who drew it. The AI is just imitating them, so it isn’t easy to accept the AI’s output as the artist’s work. One way for it to be accepted would be for the artist to endorse the work, but it is more important to offer guidance on how the work should be seen: whether it should be viewed as a sequel to the original or enjoyed on its own merits as an AI-made creation.

In the future, we will see more and more entertainment taking place in the metaverse, where our avatars represent us while participating in various forms of interaction with other avatars. These may include financial exchanges as well as commercial activities. So the question is whether this is something we can accept. If people grow used to the idea of an avatar acting as a proxy for a real person and are able to appreciate their output as something unique in its own right, I think that people will also come to accept AI-generated entertainment.

The content and profile are current as of the time of the interview (February 2022).